In the previous article in our Marketing on Facebook series, we looked at how to build a robust A/B scope of test framework to help uncover optimal relevance and ROAS. In our third and final post, we analyze the results of our test and formulate an action plan. (Be sure to refer back to the previous article for a refresher.)

Summary of Insights

Remember, we’re working with a retail advertiser’s scope of test. The retail advertiser is using Conversion and Product Catalog sales objectives. They’ve set a goal to optimize their campaigns, with the broader challenge to drive ROAS improvements.

Let’s don the hat of a Marin Customer Engagement Manager. Reviewing performance insights, we can formulate our summary:

- Men and women display consistently different trends in purchase behaviors—men have higher conversion rates, but cost more per conversion and produce lower revenue; women produce higher revenue, but have lower conversion rates.

- Instagram posted consistently higher ROAS versus other placements.

Summary of Opportunities for Optimization

In response, we note several opportunities for optimization:

- Testing men and women targeted together versus segmented

- Testing placement optimized ad sets including Instagram versus segmenting Instagram

- Testing optimizing to standard pixel events versus Custom Conversions

- Testing more refined lookback windows for seed audiences

- Testing more refined lookback windows for dynamic ads

Summary of Scope of Test

Because age, gender, and in many cases location often have a significant impact on results, the gender A/B test was weighted higher in importance for Phase 1 than the placement optimization test. This was also a priority for the advertiser at the time because they were considering a more gender-tailored approach to creative design.

We’ve noted that in setting up ad studies, a clear definition of success is very important for successful learnings, so be sure to define KPIs. We elected ‘overall campaign performance’ as the measured goal for our scope of test, and also noted improvements for our KPIs of Relevance Score and ROAS.

Let’s look at some of the highlights that the example scope of test produced.

Phase 1: Testing Gender Optimized Ad Set Versus Unique Ad Sets for Men and Women

Background: A/B test campaign targeting men and women together in an ad set, versus a campaign targeting men and women in unique ad sets. Winner is plugged into Phase 2.

Theory: Optimized ad sets—whether combining placement or genders—allow the Facebook auction algorithm to find the most opportunities from the defined audience pool. When we target women uniquely, do we see higher ROAS?

Test Results

Including men and women in the same ad set can work better in some cases. This is because the auction algorithm has more options for placing an ad impression in front of the person most likely to take the desired action, at the most efficient price.

In other cases, we need segmentation to better refine the audience versus the goal—to make it more relevant.

Learnings: The campaign segmenting men and women improved Relevance Scores by two points, and improved ROAS by 18%. Creating segmentation in the audience—limiting and refining its overall scope—helped generate a more relevant targeting pool. Because more relevant ads are more cost efficient, we saw improved ROAS in correlation with higher Relevance Scores.

Highlights: Looking at the ad set targeting men only, we saw that the Relevance Score and ROAS were about equal to that of the campaign ad set that combined gender targeting. However, the ad set targeting women only posted significantly higher Relevance Scores and ROAS. While men remained an overall difficult and more expensive conversion, the more focused and relevant ad set targeting women was able to efficiently serve impressions, and generate conversions and revenue.

Conclusion: Overall, the campaign segmenting gender targeting produced better results.

Targeting in this way achieved not only a more relevant—but also a more positive—user experience. The auction produced more value for our advertising outcomes, reaching people who mattered most to specific goals. As a result, we gained improved ROI.

Additional Insights

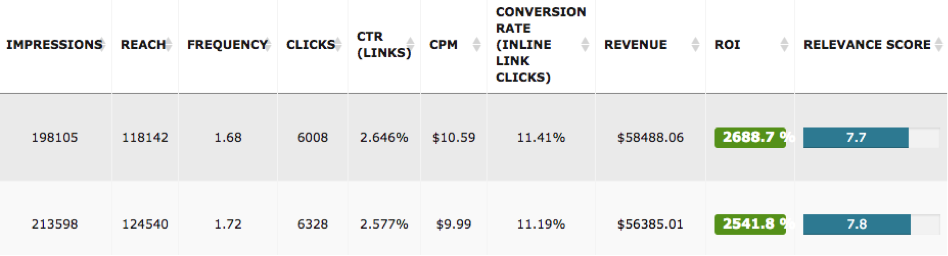

It’s possible for a budget to be spent faster yet win fewer auction impressions. Why? Because of low relevance. For comparison, the two campaigns with equal overall audiences produced the following insights in Round 1:

CPM (Campaign A, Segmented Genders): $26.16

CPM (Campaign B, Combined Genders): $29.66

For the same budget of $1,500, Campaign B produced 6,776 fewer impressions, and despite a higher conversion rate (52.94% vs. 49.89%), produced 18% less revenue.

In all, the ‘less relevant campaign’ produced fewer impressions, fewer clicks, higher overall CPAs, and lower revenue.
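The impression gap follows from the definition of CPM (cost per 1,000 impressions). Here is a minimal sketch of that arithmetic, assuming the full $1,500 was spent in each campaign; the function name is ours, for illustration only. The result lands close to the gap reported above, and a small difference is to be expected because the published CPMs are rounded.

```python
def impressions_for_budget(budget: float, cpm: float) -> float:
    """Impressions a budget buys at a given CPM (cost per 1,000 impressions)."""
    return budget / cpm * 1000


BUDGET = 1500.00        # same budget for both campaigns
CPM_SEGMENTED = 26.16   # Campaign A, segmented genders
CPM_COMBINED = 29.66    # Campaign B, combined genders

impressions_a = impressions_for_budget(BUDGET, CPM_SEGMENTED)
impressions_b = impressions_for_budget(BUDGET, CPM_COMBINED)
gap = impressions_a - impressions_b

print(f"Campaign A: {impressions_a:,.0f} impressions")
print(f"Campaign B: {impressions_b:,.0f} impressions")
print(f"Gap:        {gap:,.0f} impressions")
```

A CPM difference of $3.50 looks small, but at this budget it works out to several thousand impressions the less relevant campaign never received.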

With similar results in Round 2, we were able to show that by fostering more relevance in our targeting, our ads produced more results in the auction at a higher overall campaign ROAS.

Marin Tip: Relevance Score can be a powerful tool in your campaign management. Look for it in the Marin Social dashboard at the campaign, ad set, and ad level.

With the above results, you can also test unique creatives for men and women in the future, to see if you can further optimize with a more tailored message or image/video.

Phase 2: Test Placement Optimization Versus Segmenting Instagram

A/B test campaign utilizing Placement Optimization (including Phase 1 winner: men and women segmented), versus a campaign utilizing Placement Optimization except for Instagram, which is a unique ad set. With noted insights that Instagram produces higher ROAS, can a controlled budget via a dedicated ad set improve overall campaign ROAS?

Winner is plugged into Phase 3.

Test Results

Learnings: Segmenting Instagram into a unique placement with a controlled budget produced a 4% improvement in ROAS overall. We noted a slight uptick in Relevance Score (under 1 point).

Highlights: While Instagram continued to post performance gains in a dedicated ad set, we saw smaller overall gains than we hoped for. We noticed that the ad set utilizing all other placement options without Instagram performed markedly worse than when we included Instagram.

In the ‘overall’ performance gains overview, Instagram carried the campaign’s success. We planned further testing to produce a placement-optimized ad set.

While not as impactful as we’d hoped, Phase 2 created an opportunity to build a follow-up scope of test to understand the best combination of placements. Just because our results don’t post a clear winner doesn’t necessarily mean a failed ad study. We should view such results as additional opportunities to test and refine our strategy.

Phase 3 and Beyond

Additional testing found that building Lookalike Audiences from Custom Audiences that used narrower lookback windows (10 days was best) helped improve Relevance Scores. In addition, on average, it produced 11% gains in ROAS versus campaigns that used Lookalikes based on a seed Custom Audience with a 180-day lookback window.

We uncovered similar patterns for dynamic retargeting ads: using more tiers, with narrower windows in those tiers, proved most effective from a ROAS perspective. There was no significant impact on Relevance Scores.

Dynamic retargeting tiers we found most successful were:

- Added to Cart but not Purchased in 3 days

- Added to Cart but not Purchased in 10 days, not Added to Cart in 3 days

- Viewed Content but not Added to Cart in 7 days

- Viewed Content but not Added to Cart in 14 days, not Viewed Content in 7 days

- Viewed Content but not Added to Cart in 30 days, not Viewed Content in 14 days
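One way to read the tiers above is as non-overlapping recency bands, so that each person falls into at most one tier per action. The sketch below models that reading in plain Python. It is an illustration only and not a Facebook or Marin API: the class, field names, tier labels, and event strings are all hypothetical, and it assumes the person has not yet taken the follow-up action (Purchase or Add to Cart).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RetargetingTier:
    name: str
    event: str                              # action the person took
    missing_event: str                      # follow-up action they have not taken
    window_days: int                        # action happened within this many days
    skip_recent_days: Optional[int] = None  # ...but not within this many days

    def covers(self, days_since_event: int) -> bool:
        """True if an action this many days old falls inside the tier."""
        if not 0 <= days_since_event <= self.window_days:
            return False
        if self.skip_recent_days is not None and days_since_event <= self.skip_recent_days:
            return False
        return True


# The five tiers from the list above, in the same order.
TIERS = [
    RetargetingTier("Cart, 3 days", "AddToCart", "Purchase", 3),
    RetargetingTier("Cart, 4-10 days", "AddToCart", "Purchase", 10, skip_recent_days=3),
    RetargetingTier("View, 7 days", "ViewContent", "AddToCart", 7),
    RetargetingTier("View, 8-14 days", "ViewContent", "AddToCart", 14, skip_recent_days=7),
    RetargetingTier("View, 15-30 days", "ViewContent", "AddToCart", 30, skip_recent_days=14),
]


def tier_for(event: str, days_since_event: int) -> Optional[RetargetingTier]:
    """Return the tier covering this action and recency, or None if none applies."""
    for tier in TIERS:
        if tier.event == event and tier.covers(days_since_event):
            return tier
    return None


match = tier_for("AddToCart", 7)
print(match.name if match else "no tier")
```

Because each later tier excludes the window of the tier before it, someone who added to cart a week ago lands only in the 4-10 day band, which lets each band carry its own bid and budget without competing for the same people.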

We also determined that using a Custom Conversion produced worse overall results than using a pixel event to track conversions. When using the pixel event, for example, our Relevance Scores were on average 1.2 points higher than when we used a Custom Conversion. We also improved our ROAS when we used the pixel event.

Final Thoughts

Relevancy and quality can help advertisers achieve efficiency gains in assigned budgets on Facebook. However, they’re often overlooked in favor of bid and budget adjustments.

While bid changes and budget adjustments hold value in optimizing campaigns, Relevance Score, and more broadly the role relevance plays in the auction’s total bid, is one of the key drivers of performance efficiency.

Time after time we’ve noticed that campaigns with low Relevance Scores perform worse when compared with campaigns posting a higher Relevance Score. In these cases, increasing the bid value or switching to Auto Bid doesn’t typically improve acquisition costs or revenue.

In a lot of cases, campaigns with low Relevance Scores also under-deliver to allocated budgets.

Finally, to achieve efficiency gains, you need a carefully outlined approach that incorporates an understanding of the baseline, plus measured steps to test improvements to this baseline.

Refining Your Campaign Strategy

If you’d like to partner on projects similar to the one we’ve described here, contact your Customer Engagement Manager and they’ll gladly schedule a time to review your campaign strategy. They can also help model and support your progress through a scope of test. Or, if you’re new to Marin, feel free to get in touch.